It’s easier to manage/secure since it’s essentially just shell scripts

I love the fact that I can’t tell whether this is irony or not.

its only duty will be to spawn other, more restricted processes.

Perhaps I’m misremembering things, but I’m pretty sure SysVinit didn’t run any “more restricted processes”. It ran a bunch of bash scripts as root, and said scripts were often absolutely terrible.

The default tier of AWS glacier uses tape, which is why data retrieval takes a few hours from when you submit the request to when you can actually download the data, and costs a lot.

AFAIK Glacier is unlikely to be tape-based. A bunch of offline drives is a more realistic scenario. Generally it’s not public knowledge, though, unless you’ve found some trustworthy source for the tape theory?

FWIW the restic repository format already has two independent implementations: Restic (in Go) and Rustic (in Rust), so the chance of both going unmaintained is hopefully pretty low.

Let me be more clear: devs are not required to release binaries at all. But they should, if they want their work to be widely used.

Yeah, but that’s not the reality of the situation. Docker images are what drive wide adoption. Docker is also a great development tool if one needs to test stuff quickly, so the Dockerfile is there from the very beginning and providing an image comes almost for free.

Binaries are more involved because suddenly you have multiple OSes, glibc, musl,… It’s not always easy to build a statically linked binary (and it’s also often a bad idea), so it’s much less likely to happen. If you’ve tried just running a prebuilt binary on NixOS, you probably know it’s not as simple as chmod a+x.

I also fully agree with you that curl+pipe+bash random stuff should be banned as awful practice and that is much worse than containers in general. But posting instructions on forums and websites is not per se dangerous or a bad practice. Following them blindly is, but there are still people not wearing seatbelts in cars or helmets on bikes, so…

Exactly what I’m saying. People will do stupid stuff and containers have nothing to do with it.

Chmod 777 should be banned in any case, but that stems from container usage (due to wrongly built images) more than anything else, so I guess you are biting your own cookie here.

Most of the time it’s not necessary at all. People just go with “allow everything, because I have no idea where the problem could be”. Containers frequently run as root, so I’d say the chmod is not necessary there.

In a world where containers are the only proposed solution, I believe something will be taken from us all.

I think you mean images, not containers? I don’t think anything will be taken. An image is just easy to provide. If no binary is provided now, there would likely be no binary even without Docker.

In fact, IIRC this practice of providing binaries is a relatively new trend (popularized by Go, I think). Back in the day you got the source code and perhaps a Makefile. If you were lucky, there was a debian/src directory with code to build your package. And there was no lack of freedom.

On one hand you complain about Docker images making people dumb, on the other you complain about the absence of a pre-compiled binary instead of learning how to build the stuff you run. A bit of a double standard.

I don’t agree with the premise of your comment about containers. I think most of the downsides you listed are misplaced.

First of all they make the user dumber. Instead of learning something new, you blindly “compose pull & up” your way. Easy, but it’s dumbifier and that’s not a good thing.

I’d argue that actually using containers properly requires very solid Linux skills. If someone indeed blindly runs “compose pull & up”, this is no different from a blind curl | sudo bash, which is still very common. People are going to muddle through the installation by copy-pasting stuff no matter what. I don’t see why containers and compose files would be any different from pipe-to-bash or a random reddit comment with “step by step instructions”. Look at any forum where end users aren’t technically strong and you’ll see the same (emulation forums, Raspberry Pi based stuff, home automation,…): random shell scripts, rm -rf this ; chmod 777 that

Containers are just another piece of software that someone can and will run blindly. But I don’t see why you’d single them out here.

Second, there is a dangerous trend where projects only release containers, and that’s bad for freedom of choice

As a developer I can’t agree here. The Docker images (not “containers”, to be precise) are not there to replace deb packages. They are there because it’s easy to provide an image. It’s much harder to release a set of debs, rpms and whatnot for distributions the developer isn’t even using. The other options wouldn’t be there in the first place, because there are only so many hours in a day and my open source work is not paying my bills most of the time. (Patches and continued maintenance are of course welcome.) So the alternative would be just the source code, which you still get. No one is limiting your options there. If anything, the Dockerfile at least shows exactly how you can build the software yourself even without using Docker. It’s just a bash script with extra isolation.

I am aware that you can download an image and extract the files inside, that’s more a hack than a solution.

Yeah, please don’t do that. It’s probably not a good idea. Just build the binary or whatever you’re trying to use yourself. The binaries in an image often depend on libraries inside said image, which can be different from the ones on your system.

Third, with containers you are forced to use whatever deployment the devs have chosen for you. Maybe I don’t want 10 postgres instances one for each service, or maybe I already have my nginx reverse proxy or so.

It might be easier (effort-wise), but you’re certainly not forced. At the very least you can clone the repo and edit the Dockerfile to your liking. With a compose file it’s the same story: just edit the thing. Or don’t use it at all. I frequently use the compose file just for reference/documentation and run the software as a set of systemd units in Nix. You do you. You don’t have to follow a path that someone paved if you don’t like the destination. Remember that it’s often someone’s free time that paid for this path, and they are not obliged to provide a perfect solution for you. They are not taking anything away from you by providing a solution that someone else can use.

I’m a huge fan of Nix, but for someone wondering if they should “learn docker”, Nix is absolutely brutal.

Also IMO while there’s some overlap, one is not a complete replacement for the other. I use both in combination frequently.

There’s also Rustic. It uses the same repository format as restic. It already has some pretty neat features and, since the latest release, a ton of built-in backends.

They also mention Foreman. I like Ansible, but anything in combination with Foreman was a terrible experience, so perhaps it’s that.

Also, you can write a terrible Ansible configuration that’s then a nightmare to maintain.

Everything has its quirks. It’s quite hard to manage secrets in Nix without dropping them into the Nix store. Sops-nix is probably the closest to ansible-vault, but I’ve yet to see a good solution for short-lived secrets. (For example, secrets that are only needed to deploy something but not to run it.)

I’m curious. How would you identify who’s guest and who’s not in this case?

With multiple networks it’s pretty easy as they are on a different network.

I have a bunch of these myself and that is my experience, but I don’t have any screenshots right now.

However, there’s a great comparison of these thin clients if you don’t mind Polish: https://www.youtube.com/watch?v=DLRplLPdd3Q

Just the relevant screens to save you some time:

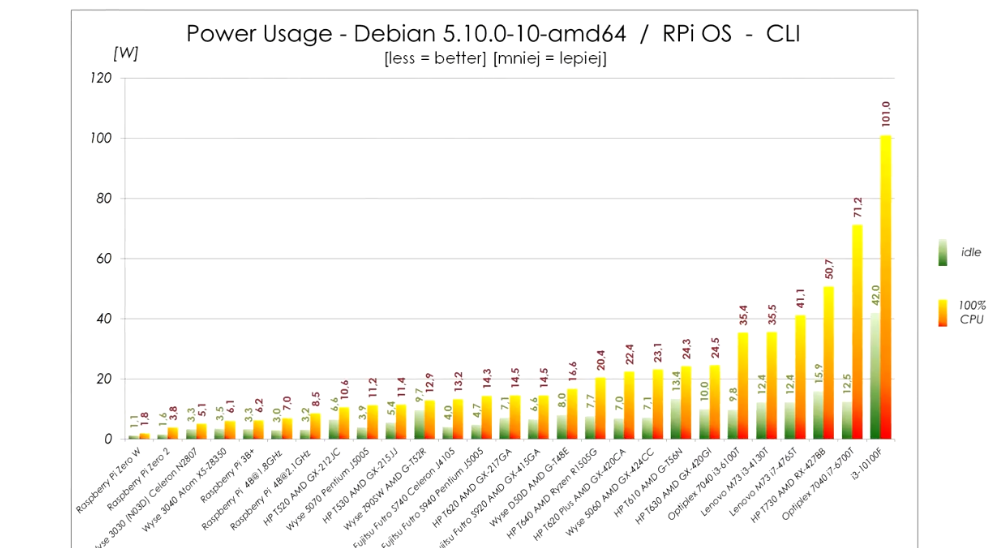

Power usage:

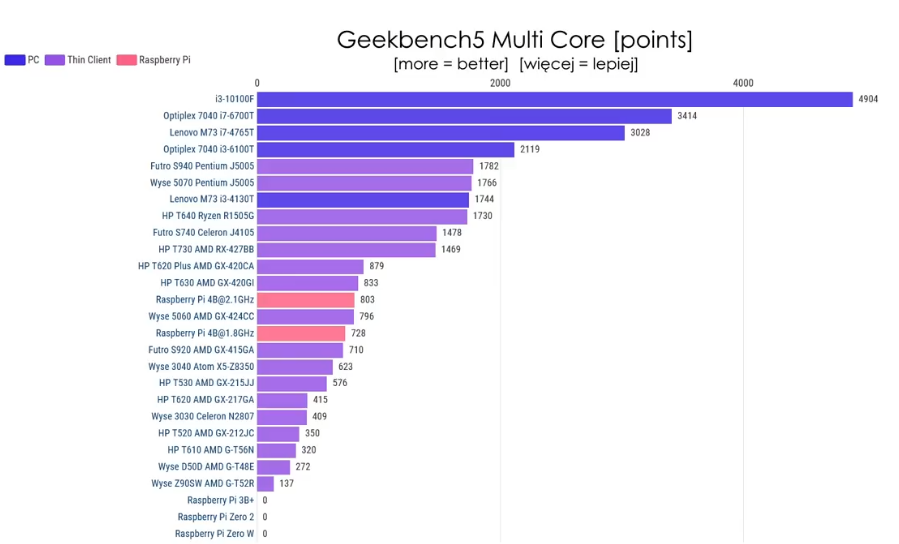

Cinebench multi core:

Idle power usage is within 2 W of the Pi 4 and the performance is about double that of an overclocked Pi 4. It’s really quite a viable alternative unless you need a really small device. The only alternative size-wise is the slightly bigger WYSE 3040, but that one has an x5-Z8350 CPU, which sits somewhere between the Pi 3B+ and the Pi 4 performance-wise. It is also very low power though, and if you don’t need that much CPU it is also a very viable replacement. (These can easily be bought for about €60 on eBay, or cheaper if you shop around.)

Also, each W of extra idle power is about 9 kWh extra consumed per year. Even if you paid 50c/kWh (which would be more than I’ve ever seen), that’s under €5 per year extra for each watt. So I wouldn’t lose sleep over 2 W more or less. Even with the high prices here, 9 kWh/year is a rounding error.
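The arithmetic behind that estimate, as a quick sketch. It assumes the device idles 24/7 for a 365-day year; the function names are made up for illustration, and the 0.50 €/kWh price is the deliberately pessimistic figure from above.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, assuming the box is powered on all year


def extra_energy_kwh_per_year(extra_watts: float) -> float:
    """Energy used per year by a constant extra draw of `extra_watts`."""
    return extra_watts * HOURS_PER_YEAR / 1000  # Wh -> kWh


def extra_cost_per_year(extra_watts: float, price_per_kwh: float) -> float:
    """Yearly cost of that extra draw at the given price per kWh."""
    return extra_energy_kwh_per_year(extra_watts) * price_per_kwh


if __name__ == "__main__":
    for watts in (1, 2):
        kwh = extra_energy_kwh_per_year(watts)
        cost = extra_cost_per_year(watts, 0.50)
        print(f"{watts} W extra: {kwh:.2f} kWh/year, {cost:.2f} EUR/year")
```

So 1 W works out to 8.76 kWh per year (the "about 9" above), and even the 2 W difference stays in single-digit euros per year at that price.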

Thin clients based on the J5005 or J4105 generally idle under 5 W (Futro S740, Wyse 5070,…). They consume a bit more when 100% loaded (11 W vs 8 W), but they also provide about 2x the performance of a Pi 4.

(The article you shared measures power consumption at the USB port, which does not take into account the overhead of the USB adapter itself.)

If you search eBay for Intel-based thin clients, many are more powerful than an RPi while being passively cooled and having very similar power consumption.

Agreed. And this is the first OSS keyboard I’ve found that can do multiple languages at the same time without switching the language/layout. (You can set a secondary language.) It requires a bit of setup (like downloading dictionaries), but I’d suggest giving it a try.

Obtainium will check regularly for new versions and update automatically. So that’s definitely a benefit if you’d like to keep the apps updated.

As for Mull, you could add its F-Droid link to Obtainium if you’d like to have all updates go through a single app.

I feel so sorry for recommending a closed source app in this community, but Genius Scan from Grizzly Labs is the only non-oss app I still use. I think I paid around €30 for the enterprise version so it doesn’t bother me with cloud nonsense.

It’s all local only (if you want) and the scanning quality is the best I’ve found. (I used OpenNoteScanner for a few months, sadly it’s not even close both in terms of quality and convenience)

I figured I’d mention it as an alternative to the MS Lens app, which likely sucks in every bit of information it can get its hands on.

That specific repository has no releases, so it won’t work AFAIK. You need a repository with releases that have an APK attached. (Typically the developer would set up a CI workflow to build and attach an APK for every release.)

Edit: For example, AuroraStore has releases with APKs. So you can just enter the GitLab repo for AuroraStore into Obtainium and it will install it and keep it updated.

But like, what kind of error are you gonna handle that’s coming from the DB, if it’s something like a connection error because the DB is down

I could return 500 (getting Error) instead of 404 (getting None) or 200 (getting Some(results)) from my web app.

Or the DB just timed out. The code that did the query is very likely the only code that can reasonably decide to retry, for example.
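A rough sketch of both points, written in Python rather than whatever the original code used. `DbError`, `status_for` and `with_retry` are hypothetical names for illustration, not part of any real library: a raised `DbError` stands in for the error case, `None` for "not found", and a list for `Some(results)`.

```python
import time
from typing import Callable, Optional


class DbError(Exception):
    """Hypothetical error: the database is down or the query timed out."""


def status_for(query: Callable[[], Optional[list]]) -> int:
    """Map the three possible outcomes of a query to an HTTP status code."""
    try:
        rows = query()
    except DbError:
        return 500  # the query itself failed, e.g. the DB is down
    if rows is None:
        return 404  # the query worked, but nothing matched
    return 200  # the query worked and returned results


def with_retry(query: Callable[[], Optional[list]],
               attempts: int = 3,
               delay_s: float = 0.0) -> Optional[list]:
    """Retry a failing query a few times, right where the query is issued."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return query()
        except DbError:
            if attempt == attempts - 1:
                raise  # out of attempts, let the caller see the error
            time.sleep(delay_s)  # brief pause before the next attempt
    raise AssertionError("unreachable")
```

If the error were swallowed and turned into "no results", the caller could neither return 500 nor decide to retry, which is the whole reason to keep the three cases apart.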

What kills Civilization for me is the phase in which I just wait for production or some other stat to fill up and there’s nothing interesting to do for a couple of turns.

Civilization Revolution is kind of the black sheep of the Civ family, because it’s “dumbed down” and “not a true Civilization game”, but IMO it has much better pacing.